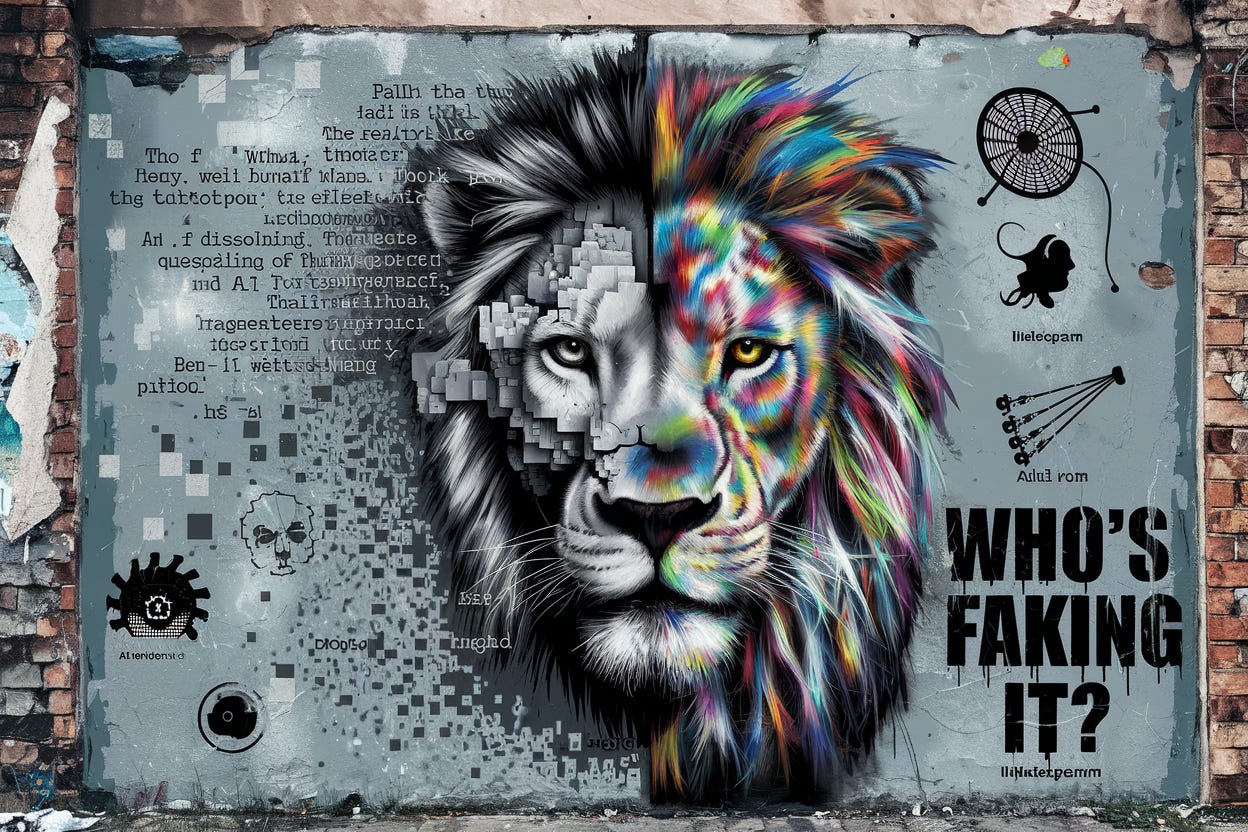

AI vs. Human Cognition: Who’s Faking It Better?

The Illusion of Understanding: Are We Just Smarter Predictive Text Machines?

We mock AI for "predicting words" instead of understanding them. But let’s be brutally honest—humans are just glorified pattern-recognizing machines too.

I studied Management of Social Processes at the University of St. Gallen (basically, sociology applied to economics), and if there’s one thing I learned, it’s this: our reality is stitched together from assumptions, habits, and narratives we don’t even question.

AI just does it faster. And without the ego.

But here’s the kicker: these ideas aren’t new. Long before AI, philosophy and sociology were already tearing apart the illusion of human “understanding.” Maybe it’s time to quiet our cleverness and rethink everything we take for granted. Because what if reality isn’t what we think it is?

1. Constructivism: Reality is a Group Project

(Truth isn’t found. It’s made up as we go.)

We love to believe in objective reality. But Constructivism argues that everything we “know” is built through social interactions, cultural conditioning, and personal experience.

Example: Imagine growing up in a society where the sky is considered green. Everyone says it's green. Textbooks say it's green. Would you ever question it?

AI doesn’t “see” the world—it predicts what comes next based on data. But guess what? So do we. The difference? AI admits it’s doing it. We call it ‘knowledge.’

2. Phenomenology: Your Brain is a Bad Camera

(You don’t experience reality. You experience your version of it.)

Phenomenology (Husserl, Merleau-Ponty) tells us that we don’t interact with an objective world—we interact with our perception of it. Everything is filtered through past experiences, emotions, and biases.

Example: Ever argued with someone who remembers an event completely differently than you? That’s not bad memory—it’s two separate realities.

AI doesn’t pretend to experience the world. Humans do, and we get it wrong all the time.

3. Post-structuralism: The Meaning of Meaning is Meaningless

(Words don’t have meaning. We just pretend they do.)

Foucault and Derrida argued that language doesn’t convey absolute meaning. It’s a slippery, ever-changing construct shaped by culture and history.

Example: The word “cool” once meant only “cold.” Then it also came to mean “stylish.” Tomorrow, Gen Alpha might use it to mean “boring.” Language is a moving target.

Humans claim to “understand” words. AI just calculates the most probable interpretation. But in a world where meaning constantly shifts, isn’t that the smarter strategy?

4. Epistemic Skepticism: You’re Just Guessing, Too

(What if knowledge is just a glorified habit?)

Hume argued that we can’t rationally justify our expectations about the future. We assume the sun will rise tomorrow because it always has, but that’s just a pattern we’ve observed, not a proof.

Example (borrowed from Bertrand Russell): A chicken gets fed by the farmer every morning. Life is good. Then one day, the farmer wrings its neck. The pattern broke. The chicken had assumed it understood reality. It didn’t.

AI predicts based on patterns. But so do we. The only difference? We’re arrogant about it.

5. Embodied Cognition: Why You Love Your Dog (and Might Love AI Next)

(Understanding isn’t just logic—it’s emotional, too.)

Cognition isn’t just about rationality—it’s shaped by emotions, physical interactions, and sensory experience. That’s why people bond deeply with pets, cars, or even AI chatbots.

Example: You know your dog doesn’t literally understand you, but you still feel the connection. If AI can trigger the same emotional response, does it even matter if it’s “real”?

So… Who’s the Real Impostor?

AI predicts. AI constructs meaning from patterns. AI doesn’t truly understand.

Neither do we.

Maybe the real question isn’t whether AI truly understands.

Maybe the real question is: do we?